A lack, or a substantially diminished quality, of human control is often understood as the major problem associated with military AI. The US Department of Defense (DoD) ‘Directive 3000.09’, released in 2012 as one of the first political documents on autonomy in weapon systems, for example, states in its updated version from 2023 that “semi-autonomous weapon systems” require “that operator control is retained over the decision to select individual targets and specific target groups for engagement” (p. 23).

In the same vein, the non-governmental organisation Article 36 introduced the concept of “meaningful human control” in 2013. It argued that “[i]t is the robust definition of meaningful human control over individual attacks that should be the central focus of efforts to prohibit fully autonomous weapons” (p. 3), while the US continues to prefer “appropriate levels of human judgment over the use of force”, a conceptual definition it introduced in 2012. Overall, human control is taken as the main benchmark for, and solution to, the many problems posed by increasing algorithmic autonomy. The AutoNorms team has already underlined in its publications that human control might not keep the promise of being meaningful (see Bode and Watts on meaningless human control).

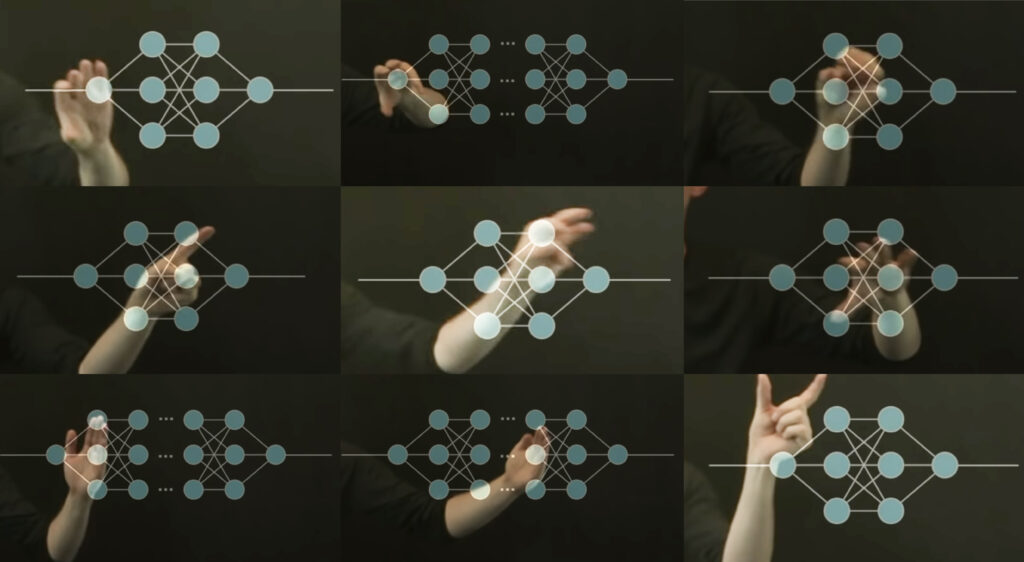

While significant academic as well as political-public attention has therefore been directed at the question of what full autonomy in military technology implies in terms of risks and opportunities, much less attention has been given to how human control works when humans team with AI. At the same time, actors such as the US military are launching initiatives to circumvent the perceived loss of human control by exploring new angles, for example by improving the way AI applications can explain their decisions to human users in operational settings. Among these is the explainable AI (XAI) programme run by the US Defense Advanced Research Projects Agency (DARPA) from 2017 to 2021. The goal of the programme was, inter alia, to “[e]nable human users to understand, appropriately trust, and effectively manage the emerging generation of artificially intelligent partners”.

In general, making AI trustworthy is one of the major civilian and military initiatives in the context of legal-ethical considerations surrounding AI developments. Actors such as the EU are active in adopting relevant regulations for the civilian sector. While the work on AI guidelines is hence proceeding and raises some regulatory expectations, the practical implications for the military domain remain largely unconsidered. In other words, the operationalisation of developing and eventually using “trustworthy” AI in a military scenario, such as in combat, is at a very early stage. A review of DARPA’s explainable AI programme after its termination concluded that there were major challenges to be tackled, adding that there was currently no universal solution to explainable AI. A central finding of this review was also that “different user types require different types of explanations. This is no different from what we face interacting with other humans”.

But the debate about autonomous weapon systems (AWS), which takes human control as a default solution, has not yet engaged in more in-depth discussions about its conception of the human in control. In fact, a one-dimensional, abstract concept of the “human” seems to prevail, in which the human just needs to be added to the technology-based decision-making loop to ensure control. However, a range of socio-cultural factors strongly affect the quality of human control. Among these are technical aspects such as user interfaces, procedural aspects such as training, and social aspects such as skills, knowledge, and role images.

Moreover, country-specific factors, such as military culture or whether technology is viewed as rational/progressive or as malicious/uncontrollable, could influence trust and distrust. This is especially important as the most critical use of AI concerns situations where extreme forms of physical harm could occur, including decisions about life and death. These situations are characterised by time constraints on human operators, data overload, as well as physical and mental stress. The US military is therefore particularly interested in finding an “appropriate” solution to the trust problem. In essence, the soldier as a human operator (and abstract control instance) works most efficiently with AI if the default mode is a relationship of de facto trust instead of control. Arguably, making AI more trustworthy does not necessarily offer a technological solution to the challenge of making military AI more reliable or ethically/legally less problematic in situations where it “acts” autonomously without human intervention. Instead, it offers a technological solution to the social problem of being unwilling to team with AI that acts outside of human control by incorporating elements that increase human trust.

The most recent major initiative by DARPA in this regard, “AI Forward”, was launched in early 2023 and focusses on trustworthy AI. AI Forward kicks off with workshops in the summer of 2023 where “participants will have an opportunity to brainstorm new directions towards trustworthy AI with applications for national security”.

Meanwhile, the DoD has recently highlighted aspects such as responsible and reliable AI with the adoption of Ethical Principles for Artificial Intelligence in 2020 and the “Responsible Artificial Intelligence Strategy and Implementation Pathway” in 2022. The shift towards operationalising reliable AI via the concept of trustworthy AI is significant, as the question of trust introduces a terminology that carries even stronger social connotations than reliable technology. However, as was outlined in the 2022 Strategy, “RAI [Responsible AI] is a journey to trust. It is an approach to design, development, deployment, and use that ensures the safety of our systems and their ethical employment” (p. 6). The vague explanation of how RAI translates into trust is symptomatic of the current attempts to address the ethical and legal challenges of using military AI. While policymakers at the declaratory level produce various statements, guidelines, and position papers presenting concepts (or rather keywords) that are supposed to inform the life cycle of military AI, the gap to the operational level remains wide open. There are not yet convincing and uncontested solutions to the aforementioned challenges when it comes to using AI in the complex and difficult situations that are typical of warfare.

The most recent DARPA initiatives should be understood as further attempts to close this gap. However, the focus on trustworthy AI initially raises more questions than it answers. The requirement of trust arguably goes beyond reliability, and trust in AI in life and death situations is at the heart of the core concerns discussed in the international debate on AWS for the past decade. The explainable AI project as well as the objectives of AI Forward suggest that the US military will turn more to considering how the operational level can provide standards of “appropriate” use in practice. The theoretical foundations of the AutoNorms project have highlighted the importance of this analytical level from the outset. It therefore continues to promise important empirical insights into the emergence of new norms in the context of AWS.