The development, deployment and use of autonomous weapons systems (AWS) is a subject of growing scholarly debate, and China is no exception to this trend. To provide a better understanding of Chinese perceptions of weaponised Artificial Intelligence (AI), this article briefly examines relevant Chinese-language sources available from the Knowledge Resource Integrated Database of the China National Knowledge Infrastructure (CNKI). CNKI is the largest, most comprehensive and most-accessed platform for Chinese journals, books, theses, conference proceedings and newspapers, which makes it a useful window onto Chinese thinking about weaponised AI. Such insight is of critical value to policymakers and practitioners working on AI regulation and arms control who wish to communicate and cooperate more effectively with China.

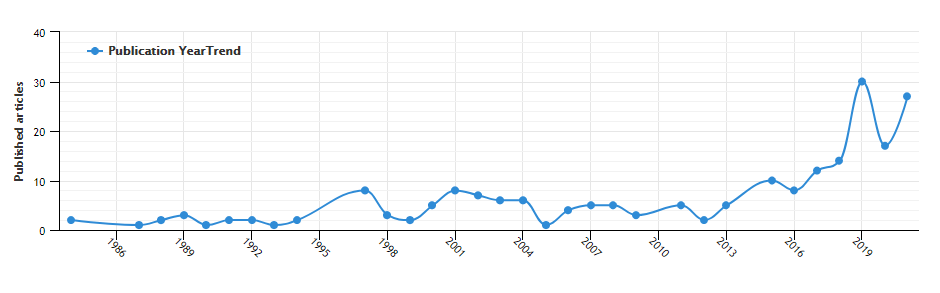

Chinese research on military applications of AI dates back to the late 1980s, when the subject was usually discussed under the banner of intelligent weapons (智能武器). Figure 1 below shows the number of publications on this subject within the CNKI database. The first two spikes in studies of intelligent weapons occurred in the mid-1990s and the early 2000s (see, e.g., Li, M. 1998; Li, J. 2000; Zhou, 2002). The articles and books from these periods focus primarily on the development of more autonomous weapons systems in other countries, with particular attention to the weapons deployed by the United States (US) in the Gulf War and in its invasions of Afghanistan and Iraq. These texts contain very little reflection on the domestic development of these technologies. Since then, Chinese analysts have predicted that intelligentised warfare (智能化战争), characterised by ‘algorithm confrontation’, would become the prevailing form of war. In Chinese conceptions of military operations, intelligentised warfare is defined as the fifth stage of warfare, following “cold weapon warfare” (冷兵器战争), “hot weapon warfare” (热兵器战争), “mechanised warfare” (机械化战争) and “informationised warfare” (信息化战争). Despite expectations that robots may complement humans both physically and mentally in future combat, most scholars argue that robots will not replace humans completely and that winning in warfare requires an optimised combination of command-and-control personnel and intelligent weapons.

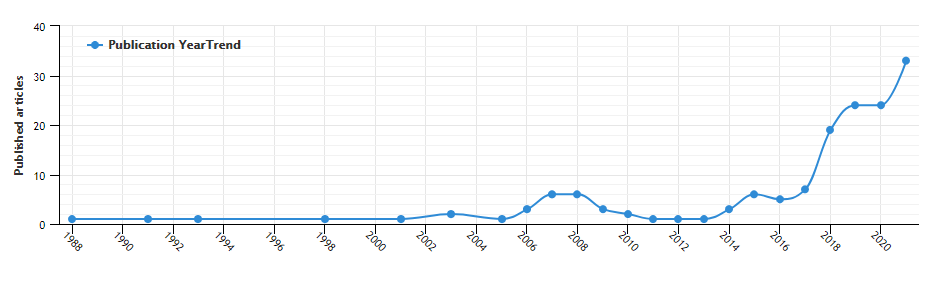

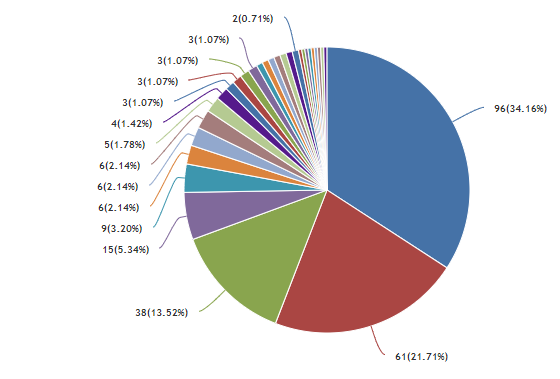

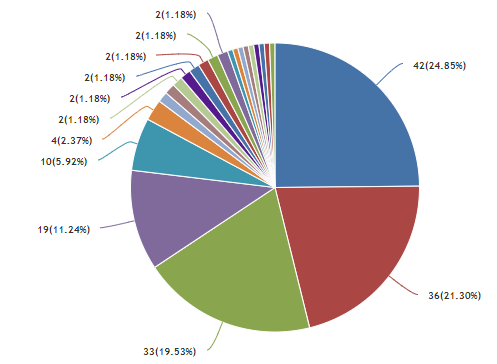

From 2013 onwards, as international debate on lethal autonomous weapons systems (LAWS) gained momentum under the framework of the United Nations Convention on Certain Conventional Weapons (CCW), there was a sharper increase in the study of weaponised AI in China (see Figure 2). Moreover, a growing number of studies specifically use the terms autonomous weapons (自主武器) or autonomous weapons systems (自主武器系统), consistent with the nomenclature used in international discussions on the subject. Since around 2013, articles on “autonomous weapons” have mainly been written by scholars working within the discipline of international law. Interestingly, this differs from articles on “intelligent weapons”, which have mainly been written by those working in the fields of military affairs and technology (see Figures 3 & 4). Intelligent weapons can be broadly interpreted as weapons systems that leverage AI in selecting and engaging targets. Autonomous weapons carry all the traits of intelligent weapons but also embody a certain level of independence from human agents in operations. In this sense, autonomous weapons can be considered a sub-category of intelligent weapons. The different terminologies used in these respective fields of research possibly reflect a growing consideration not only of the potential military consequences of weaponised AI but also of legal approaches to mitigating potential harms.

One striking feature of Chinese narratives around AWS between 2013 and 2017 is a generally optimistic outlook on technological developments. The Chinese literature of this period contains proportionately fewer critics of greater autonomy in weapons systems than English-language literature published during the same years. There is a common perception that the development and use of AI provides a window of opportunity for China to “overtake” other military powers and shift from being a geopolitical “follower” to a “leader”. For example, Chen Dongheng of the Academy of Military Sciences of the People’s Liberation Army claimed that China should leverage its advantage in AI technology to promote military applications of AI in order to achieve the “goal of building a strong military in the new era and building a world-class military”. This view is consistent with the rhetoric of the Chinese government.

Nevertheless, since 2017, China’s academic community has begun to diverge on whether the further weaponisation of AI will present more challenges than opportunities for China. There are clear signs of growing criticism of the safety and ethical risks associated with the development and deployment of AWS, as reflected in the rising number of relevant articles in the scholarship on autonomous weapons. Numerous scholars have introduced the debates within the CCW process, analysing the potential incompatibility of LAWS with international (humanitarian) law, especially with respect to the principles of distinction and proportionality. Concerns have also been raised about the challenges LAWS pose for attributing responsibility for misconduct in military operations (e.g., Zhang, W. 2019; Zhang, H. and Du, 2021). Chinese writers have increasingly begun to perceive AWS as a double-edged sword. Some portray AWS in a more negative light, for example, as a source of international instability and a challenge to humanity. A few scholars urge stricter restrictions on the research, trade, deployment and use of AWS, reserving for human agents alone the right to “pull the trigger” whenever military force is used (e.g., Dong, 2018; Wu, 2018). Some have discussed the roles that humans should play in human-machine interaction, for example, as a combination of “operational controller, protector against failure, and moral judge”. Some scholars argue that, despite technological advances, humans remain the decisive factor in defeating adversaries, citing Marxist theories of war that place emphasis on organising, mobilising and arming people on all fronts.

It is worth noting that great-power competition appears to have factored significantly into Chinese writings on AWS. Chinese academics and military analysts remain deeply sceptical about the effectiveness of the CCW process in slowing the development of AWS in other countries, given the profound military advantages such systems promise (Liu, Y. 2018). Some believe that AWS should not be developed but are seriously concerned that the trend towards integrating greater autonomy into weapons systems is unavoidable. Many scholars view the security situation China faces in more realist terms, especially in light of intensified US-China competition. These scholars tend to believe that China should continue to pursue the development of AWS to avoid a worst-case scenario in which adversaries develop advanced AWS that put China at a strategic disadvantage (Liu, Y. 2018).

Looking ahead, numerous commentators call on the Chinese government to participate actively in, and eventually lead, discussions on the arms control of LAWS under the CCW framework. This stems from a belief that the CCW process is the best forum for exploring possible methods of “embedding human ethics and morality into autonomous systems” (e.g., Long and Xu, 2019). Some of the same articles also discuss how China should compete for international prestige and leadership (e.g., Chen and Zhu, 2020; Zhang, Q. and Yang, 2021). Proposed measures include establishing a mandatory legal review system for AWS, adopting the principle of meaningful human control as part of a state responsibility regime, and creating a regime of individual criminal responsibility for malicious research and development activities (e.g., Li, S. 2021).

What does all this mean for future international cooperation against potential abuses of military AI? This brief review will, it is hoped, help outside observers understand that, as in Western states, preventing the development and use of AI weapons that challenge human dignity has broad support among academics and analysts in China. Governments can leverage this common ground to steer international cooperation on AI in a direction that benefits all countries. At the same time, as discussed above, the willingness to work collectively on the challenges posed by AI is fragile, given that national security considerations often take precedence. In light of the new era of US-China competition, communication and collaboration between the two sides towards an improved international security environment may be a prerequisite for reaching any meaningful international measures to address the challenges posed by AI.