This blog is based on the regulation subpanel of the Realities of Algorithmic Warfare breakout session, held at the REAIM Summit 2023. Watch the full breakout session here.

The global debate on military applications of artificial intelligence (AI) and autonomy is gradually expanding beyond autonomous weapon systems (AWS) towards the concept of ‘Responsible AI’. Proponents of this framing often argue that AI technologies offer unprecedented opportunities for the defence sphere, while recognising the challenges and risks associated with military uses of AI. The overall narrative is that since applications of AI in the military and weapon systems are here to stay, we must find ways of developing and using these technologies responsibly.

What exactly this implies, however, remains debated. The ambiguity of the Responsible AI concept was visible during the REAIM Summit, co-hosted by the Government of the Netherlands and the Republic of Korea on 15-16 February 2023 in The Hague. The Summit brought together a range of stakeholders, including governments, militaries, researchers, the private sector, and civil society, to consider the opportunities and risks associated with military AI, as well as the potential for regulation in this area.

Throughout the REAIM plenary and breakout sessions, actors displayed various understandings and interpretations of this term and, crucially, of what it means for global governance. There is agreement on the importance of using AI responsibly, for instance by retaining human control over the use of force, but not on what this responsibility means exactly. There is particular uncertainty about concrete ways to ensure this responsibility. Overall, the Responsible AI frame allows actors to be vague in their proposals for global governance and regulation. It also risks undermining efforts to set binding legal norms on the development, testing, and use of AI in the military domain.

What is Responsible AI in the military?

Many science-fiction portrayals of (anthropomorphic) AI feature humanoid robots and androids depicted as beings with their own conscience. This gives the misleading impression that AI applications can be legal subjects, and are thus legally, morally, and ethically responsible for their actions or thoughts.

But as Virginia Dignum points out, Responsible AI is not about AI being responsible. It is all about humans and the “human responsibility for the development of intelligent systems along fundamental human principles and values”. As Dignum writes, “it is socio-technical system of which the [AI] applications are part of that must bear responsibility and ensure trust”. This socio-technical system is formed through human-machine interaction and encompasses not only technical aspects, but also societal, ethical, normative, legal, and political ones. Yet human-machine interaction is becoming increasingly complex, especially in the spheres of security and warfare. Defining Responsible AI in the military is therefore also a complex process. It is much more than a catch-all term for political declarations.

As argued by Vincent Boulanin and Dustin Lewis, the concept of Responsible AI so far “appears to be more or less an empty shell”. Only a few states have published guidelines and clarifications on what they consider to be responsible applications of AI and autonomy in the military. Research by UNIDIR demonstrates that “the global AI policy landscape is still at a nascent stage”, although “gradually evolving”.

Examples include the US Department of Defense’s “Ethical Principles for Artificial Intelligence” (2020), its “Responsible Artificial Intelligence Strategy and Implementation Pathway” (2022), and, more recently, the US Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy (2023). The United Kingdom released its guidelines on the Ambitious, Safe, and Responsible use of AI alongside its Defence AI Strategy in June 2022. The French Ministère des Armées, meanwhile, published an AI Task Force report in 2019, with then Defence Minister Florence Parly declaring that “the path we choose is that of responsibility, of protecting both our values and our fellow-citizens while embracing the amazing opportunities that AI offers”.

While such initiatives are valuable contributions to the debate, more is needed and expected from experts, scholars, and industry. At one REAIM session, Elke Schwarz argued that Responsible AI should go beyond “abstract ideas about what responsible is or could be”. It should also mean considering situations in which AI-based systems should not be used, especially when life-and-death decisions are at stake, and holding a broader discussion about the uncertainty and unpredictability associated with AI technologies.

At another panel, AI scholar Stuart Russell suggested that the main question should be: will we use military AI in a responsible way? Evidence from both the civilian and military spheres suggests that the answer to Russell’s question, at least in the near future, is no. In that case, Russell said, we need to think about our policy responses, especially in terms of setting binding international norms and regulations.

The implications of Responsible AI for regulation

Currently, there is no legally binding international regulation specific to AI applications in any domain, as discussions about the role of AI technologies in our societies are still ongoing. Military uses of AI raise particular questions about the roles of humans and machines in the use of force, as well as concerns from legal, ethical, and security perspectives.

Legal aspects, however, are often the focus. At the Realities of Algorithmic Warfare session, Jessica Dorsey outlined some of the key questions from a legal point of view: is it possible to ensure legal accountability when machines make targeting decisions? How can humans review the algorithms and data in black-boxed AI applications? What happens between the input and the output? What is the minimum acceptable level of human control to ensure legitimate military operations?

In the absence of binding international legal norms on AI and autonomy in the military, the ongoing practices of armed forces around the world have been gradually transforming the nature of human-machine interaction in warfare, for example in ways that limit the space for critical reflection by human operators of weapon systems.

Scholars have suggested that International Humanitarian Law (IHL), which regulates armed conflict, might be insufficient to address some of the complex issues related to the growing normalization of AI and autonomy in security and defence. Some industry actors agree: at one REAIM plenary session, the Chief Technology Officer of Saab, Petter Bedoire, indicated that governments should provide more guidance on accountability and responsibility, as “IHL is not precise enough”.

Yet, the Responsible AI framing is not necessarily more helpful when it comes to regulation. On the contrary, there is a risk that this trope undermines efforts to move towards global legally binding norms.

As Samar Nawaz argued at the Realities of Algorithmic Warfare session, moving forward with regulatory frameworks requires precise definitions and conceptions of key notions. Years-long definitional debates surrounding autonomy have delayed the regulatory discussion in both the civilian and the military sectors. The ambiguity of the Responsible AI concept gives states the opportunity to define subjectively what is responsible and what is not.

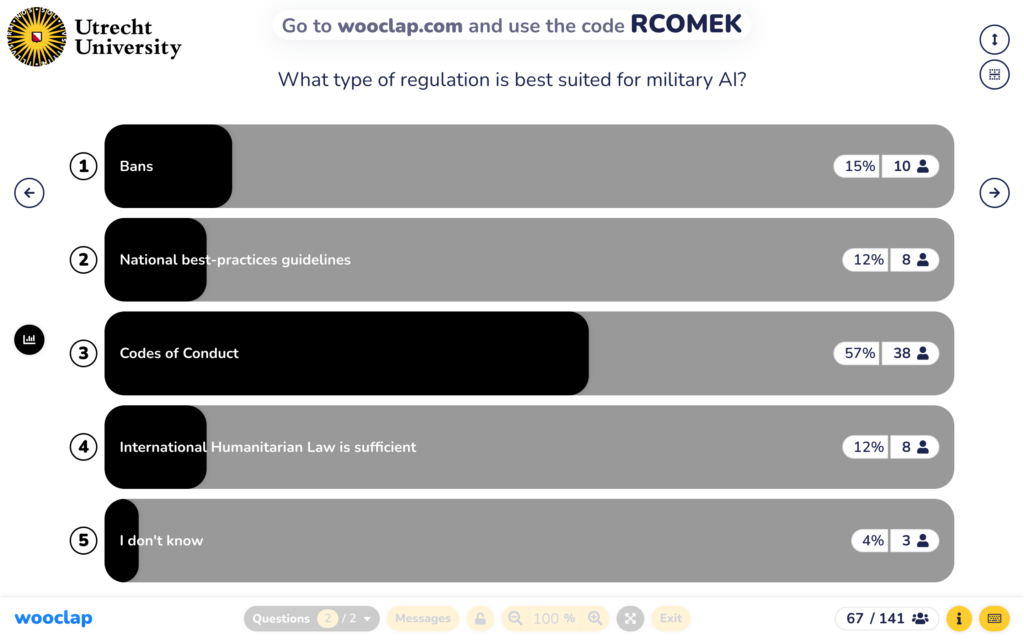

The frame also allows for different interpretations of the policy responses needed to address human responsibility in security and warfare. Based on their own definitions of responsibility, a growing number of actors prioritise unilateral declarations over multilateral governance frameworks and common definitions. Many stakeholders argue that voluntary national codes of conduct or best-practice guides are best suited to regulating military uses of AI (see figure 1).

The Responsible AI trope also allows actors to frame global regulation and norm-setting as a zero-sum game with winners and losers. During REAIM, many state officials continued to push the narrative that only some states’ uses of AI in the military are responsible, whereas others’ will automatically be irresponsible. This discourse is fuelled by the perception of an ongoing ‘AI race’ and the conviction that it is crucial to develop cutting-edge military AI and autonomy to surpass adversaries.

Political declarations such as the one released by the US have been described as potential “building blocks” towards a more coordinated effort at the international level, for instance common standards which reflect existing military applications of AI. Such measures could be good first steps in broadening the debate beyond futuristic AWS.

But given the vagueness of the Responsible AI trope, unilateral measures risk becoming a way for states that do not want to sign up to legal rules to still “appear as though they are doing something”. As Tobias Vestner and Juliette François-Blouin write, the REAIM Call to Action “is relatively unspecific regarding concrete measures and prudent in terms of commitment”, which illustrates this approach of demonstrating responsible unilateral action rather than pursuing global norm-setting.

Relying on voluntary measures, however, is insufficient to address the global implications of military uses of AI. Setting legally binding global norms on responsible uses of military AI and autonomy would be a common good and a win-win situation for everyone.

Prospects for global regulation

Recent events have shown some signs of progress towards norms on AI and autonomy in the military. REAIM was followed by a regional conference organised by and held in Costa Rica. In the Belén Communiqué, 33 states from Latin America and the Caribbean agreed “on the urgent need to negotiate a legally binding instrument, with prohibitions and regulations, on autonomy in weapons systems in order to guarantee meaningful human control”. The Communiqué was also endorsed by Andorra, Portugal, and Spain at the 2023 Ibero-American Summit in March.

Meanwhile, the ongoing debate about autonomous weapons at the UN Convention on Certain Conventional Weapons (UN CCW) has stalled since the 11 Guiding Principles were adopted in 2019. However, the latest meeting of the Group of Governmental Experts (GGE) on AWS in March 2023 demonstrated the possibility of a more constructive and substantive discussion, generating optimism among delegations and civil society.

Many states seem to be converging on a two-tiered approach: prohibiting AWS that cannot be used in compliance with IHL and regulating other forms of AWS. However, there is no consensus on this way forward, with states such as India, Israel, Russia, and Türkiye opposing it.

The Responsible AI narrative does not facilitate the norm-setting process within the CCW forum. It reinforces the perception that global norms necessarily create winners and losers, which in turn fuels the position that unilateral codes of conduct and political declarations are sufficient to address challenges related to military applications of AI.

As part of Responsible AI, abstract discussions should be complemented by concrete actions from states willing to demonstrate moral leadership and set legally binding rules. States also have other options, including regional initiatives, to launch normative processes and enshrine agreed principles on military uses of AI and autonomy, even without the immediate participation of major military developers.