On 15 June 2022, the UK Government released its new Defence AI Strategy, outlining its plans to further integrate artificial intelligence into its Armed Forces. The strategy includes objectives such as making the Ministry of Defence (MOD) an “AI ready” organisation, exploiting AI “at [a] pace and scale for Defence advantage”, strengthening the UK’s defence AI ecosystem, and shaping “global AI developments to promote security, stability and democratic values”.

With this strategy, the UK visibly seeks to build upon its March 2021 Integrated Review of Security and Defence, which acts as a guiding framework for UK foreign and security policy. The Review, called Global Britain in a competitive age, highlights the perceived importance of growing the UK’s technological power in a time of “systemic competition”, especially with China and Russia. It also refers to AI as a “critical area” where the UK needs to establish a “leading edge”.

The 2022 defence AI strategy goals are also framed as part of the Government’s “drive for strategic advantage through science and technology” and its ambition to become a “Science and Technology superpower by 2030”. The new strategy is therefore consistent with previous governmental publications, but it is nevertheless worth examining as the main document guiding the military AI policy of a key global security player.

The narratives of an ‘AI arms race’ and strategic competition are present throughout the document, starting with the foreword to the strategy by the Defence Secretary Ben Wallace. Wallace situates the UK within an era of “persistent global competition”, references Russia’s invasion of Ukraine several times, and rightly highlights the importance of standing up to President Putin’s regime. Within this context, he argues that it is essential to invest in military AI in order to be “relevant and effective” and “meet these various challenges”.

Wallace writes about the return of “industrial-age warfare” in Europe and links it to the importance of modernising the Armed Forces with the help of AI. But is the invasion of Ukraine really the best example to justify this focus on AI? As previously noted on the AutoNorms blog and elsewhere, there is so far little evidence to suggest a widespread use of AI by Russia in its invasion of Ukraine. While this could change, reports from the ground currently challenge the narrative that AI is the future of warfare and that a ‘global AI arms race’ is already underway. As Paul Scharre and Heather Roff have argued, the ‘AI arms race’ narrative is not helpful for describing the technological competition of which AI is considered to be a part.

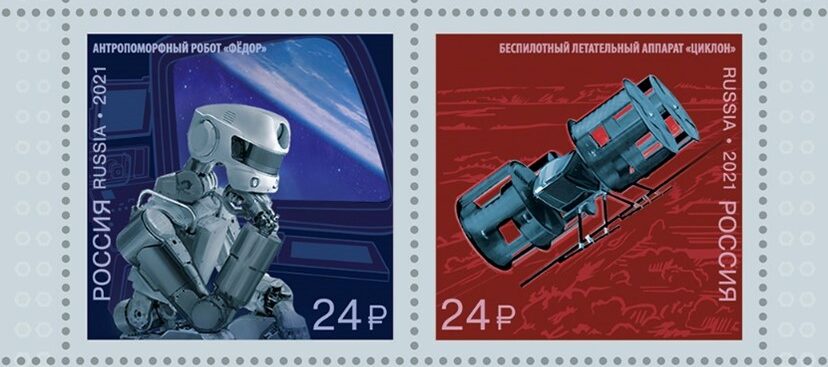

Moreover, Wallace resorts to the narrative of ‘if we do not do it, others will’ by writing, “AI has enormous potential to enhance capability, but it is all too often spoken about as a potential threat. AI-enabled systems do indeed pose a threat to our security, in the hands of our adversaries, and it is imperative that we do not cede them a vital advantage”. The feeling of competition, especially in times of increased confrontation, is driving what Andrew Dwyer calls the UK’s “winner-takes-all” approach to AI in national security, which emphasizes the importance of developing military AI in order to adapt to a world order with competitors such as China and Russia also making progress in these technologies.

These arguments are, of course, not new. They resonate across national strategies and statements of officials in other states, including the UK’s allies such as the United States and Australia, and rivals such as Russia. Headlines about ‘the AI arms race’ also constantly feature in the media. There has been an increasing ‘hype’ surrounding this topic, making ‘global tech race’ and ‘AI’ buzzwords in the discourses of policymakers and analysts and adding pressure to use them in national strategies. In addition, the UK has been facing the challenge of redefining its post-Brexit foreign and security policy and situating itself in this environment of geopolitical competition and tensions that have intensified in recent years. The objective of being a science and technology superpower through investment in AI is one way for Global Britain to position itself and demonstrate its intention to catch up with the US, the current leader in this field.

The importance of excelling in the “intense” AI competition is the dominant narrative which trumps all other debates. Although some issues associated with the integration of AI into weapon systems and military command and control, including legal, ethical and malfunction concerns, are mentioned in the strategy, the implications of this are not explored in depth. For instance, Wallace recognizes that uses of AI can raise “profound issues”, but adds, “but think for a moment about the number of AI-enabled devices you have at home and ask yourself whether we shouldn’t make use of the same technology to defend ourselves and our values”.

The phrasing presents the category of AI as a unified actor and does not tackle the different subfields of computer science that are associated with AI, suggesting that all AI-enabled devices will be beneficial from a security point of view. Such a suggestion ignores the controversies related to the uses of AI and machine learning-enabled devices or systems in a civilian context. Researchers have been pointing out issues related to the current uses of algorithmic models and especially the data they use. The data can be incomplete, low-quality, or biased. The problems related to the “AI-enabled devices” we use at home raise questions about the usefulness of AI-enabled technologies both in terms of defence (due to system malfunctions, for instance) and in terms of the democratic values that the UK seeks to promote (due to many algorithmic models favouring certain groups of people based on human biases and the data they have been fed). Such debates should not be overlooked simply by referring to the AI competition discourses. Unfortunately, they are not explored in depth in the strategy.

At the same time, the strategy also mentions the intention to develop military AI in line with the UK’s values, to lead by example and promote a “safe and responsible” use of military AI, and to avoid a “race to the bottom”. It emphasizes that the UK will be using military AI to uphold democratic values, while adversaries are expected to “use AI in ways that we would consider unacceptable on legal, ethical, or safety grounds”. Will this attempt to be a leader in ethical applications of technology translate into leadership in terms of regulation of AI? If the UK plans to lead by example with an ethical approach, it will also need to clarify its stance on concrete ways of setting international norms and standards in this area, for instance in the ongoing debate on autonomous weapons at the United Nations.

AI and autonomy in weapon systems

The strategy touches upon the UK’s position on autonomy in weapon systems, (lethal) autonomous weapon systems (LAWS), or so-called “killer robots”. In a policy statement published along with the strategy, the MOD states that weapons which identify and attack targets “without context-appropriate human involvement” are not acceptable. The UK Campaign to Stop Killer Robots welcomed this stance, which differs from the MOD’s previously ambiguous definition of autonomous systems as having “higher-level intent and direction”. As the Campaign also rightly noted, the exact definition of “context-appropriate human involvement” remains unclear and the UK’s policy on whether this concept could or should be codified in legal norms is also vague.

The MOD recognizes that global governance for LAWS is challenging but maintains its position that the UN Convention on Certain Conventional Weapons (CCW) remains the most appropriate forum to “shape international norms and standards” about ethical uses of military AI. The deadlocked CCW forum is now considered by many analysts and civil society actors as an improbable avenue to reach a consensus on norms and standards in relation to LAWS. At the last meeting of experts on this issue, the Russian delegation opposed holding talks in a formal format, preventing progress in the discussion.

In these circumstances, it remains unclear from the strategy what kind of role the UK intends to play in reaching consensus with other CCW states parties. The document mentions respect for current international humanitarian law, as well as codes of conduct, positive obligations and best practices, but there is no indication of whether and how the UK intends to be a norm-shaper via instruments of international law, such as a potentially legally binding instrument regulating autonomy in weapon systems.

In May 2021, the AutoNorms team submitted evidence to the UK House of Commons Foreign Affairs Committee, recommending that the Government should capitalize on the opportunity to spearhead global governance on military AI, including at the CCW, but also within NATO and other alliances. The new Defence AI strategy only partially reflects the UK’s intention to do this. The Government continues to portray technology and international security through the prism of intense global competition and to strengthen the narrative of the perceived necessity of investing in military AI because competitors are doing it as well. While this represents an attempt to situate Global Britain as a leader in developing AI in a so-called “ambitious, safe, and responsible” way, the UK can better advance its interests and leadership goals by putting forward concrete plans for shaping international regulation, whether at the UN CCW or elsewhere, and by not sidelining the concerns related to military AI uses behind the competition narrative.